By Erinne Ong

When AI systems generate insights in a matter of seconds, the magic behind the algorithm is often left unexplained. This is known as the black box of AI, and translating the inner workings of these technologies into something that makes sense and that we can trust is a perennial challenge.

But with AI now adopted for applications like medical diagnosis and credit scoring, these models not only handle sensitive data but also guide crucial decision-making. Given the huge sway that AI-powered tech can have over our lives, experts and authorities are realising the need to look inside the box to ensure safety, accountability, fairness and—crucially—trust.

To this end, thought leaders and industry experts from the East and West convened at Singapore’s ATxAI Conference on 14 July 2021. The conference, co-organised by the Infocomm Media Development Authority (IMDA), Personal Data Protection Commission (PDPC) and National University of Singapore (NUS), discussed the strategic value of AI governance and technology trends, and showcased real-life implementations.

Building a foundation of digital trust

On local shores, the infocomm media sector proved an economic pillar during the pandemic, growing by 4.8 percent in 2020. But a foundation of trust is increasingly vital for digital developments to advance further, said Minister for Communications and Information Josephine Teo in her opening remarks.

From data breaches to stolen identities, the digital realm may come with hidden risks. Recognising escalating wariness, Minister Teo announced the government’s commitment of S$50 million to boost Singapore’s digital trust capabilities over the next five years.

We want to foster an environment where businesses and consumers feel safe and confident about using digital technologies.

Mrs Josephine Teo

Minister for Communications and Information of Singapore

For its part, IMDA has previously released a Model AI Governance Framework that translates high-level principles into implementable measures for companies to adopt. “Together with industry players, we have developed practical guidelines on how to develop and deploy AI responsibly,” Minister Teo shared, with certification programmes also in the works to validate AI systems objectively and aid in achieving transparent AI.

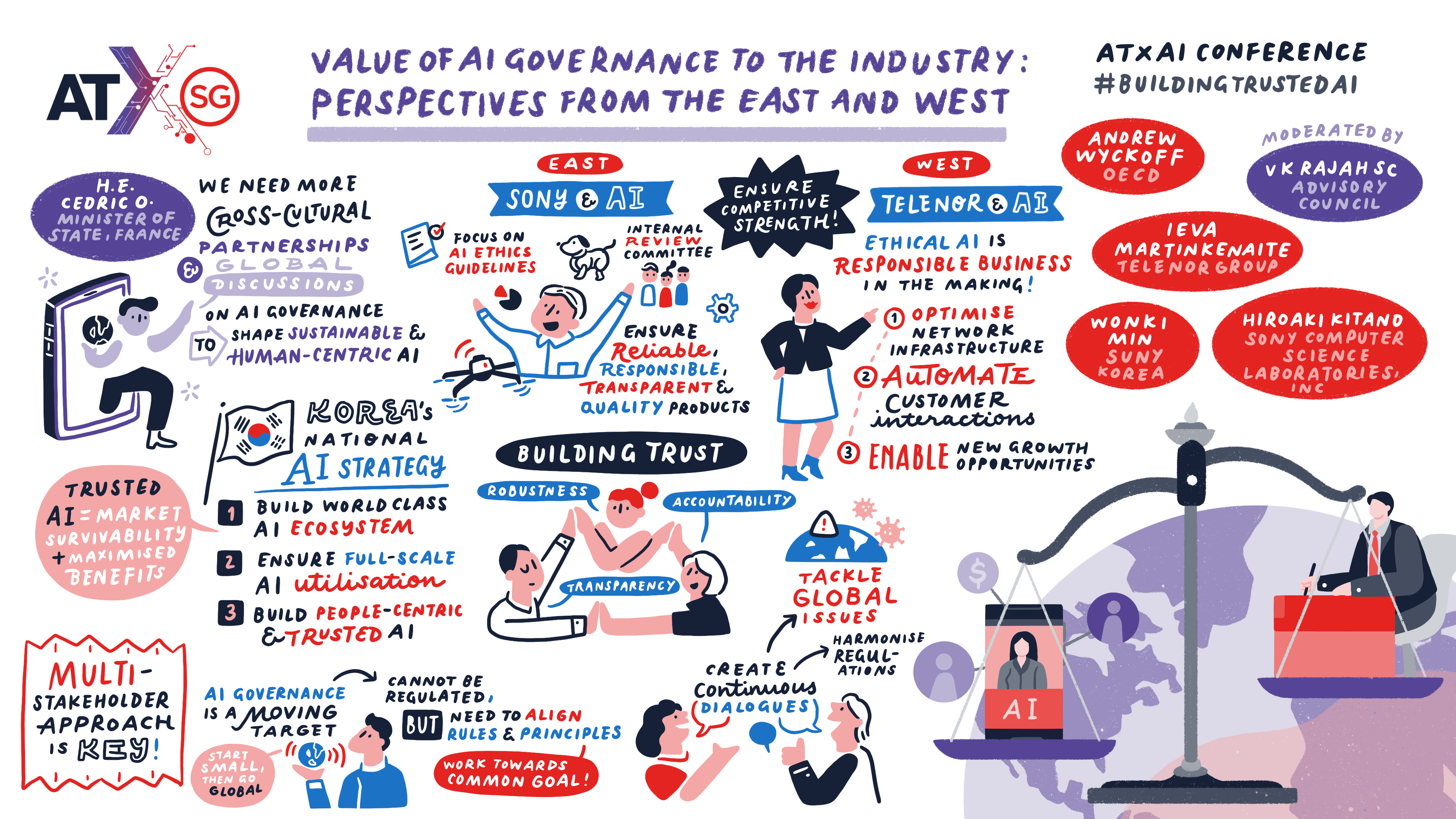

Around the world, AI governance is already proving valuable for the industry. In a discussion moderated by Mr V.K. Rajah SC, Chairman of Singapore’s Advisory Council on the Ethical Use of AI and Data, panellists from across the world discussed the strategic value of AI governance. They shared that AI ethics guidelines are key to delivering reliable products, achieving market sustainability and leading a responsible business. As AI governance is a moving target, continuous and global dialogues will help align guidelines for multiple stakeholders, allowing diverse communities to work together towards human-centric AI.

The panellists also agreed that it is critical for trust in AI to be maintained, and the time spent on building up this trust is not a liability.

Explainability and ensuring robustness are key in building trustworthy AI

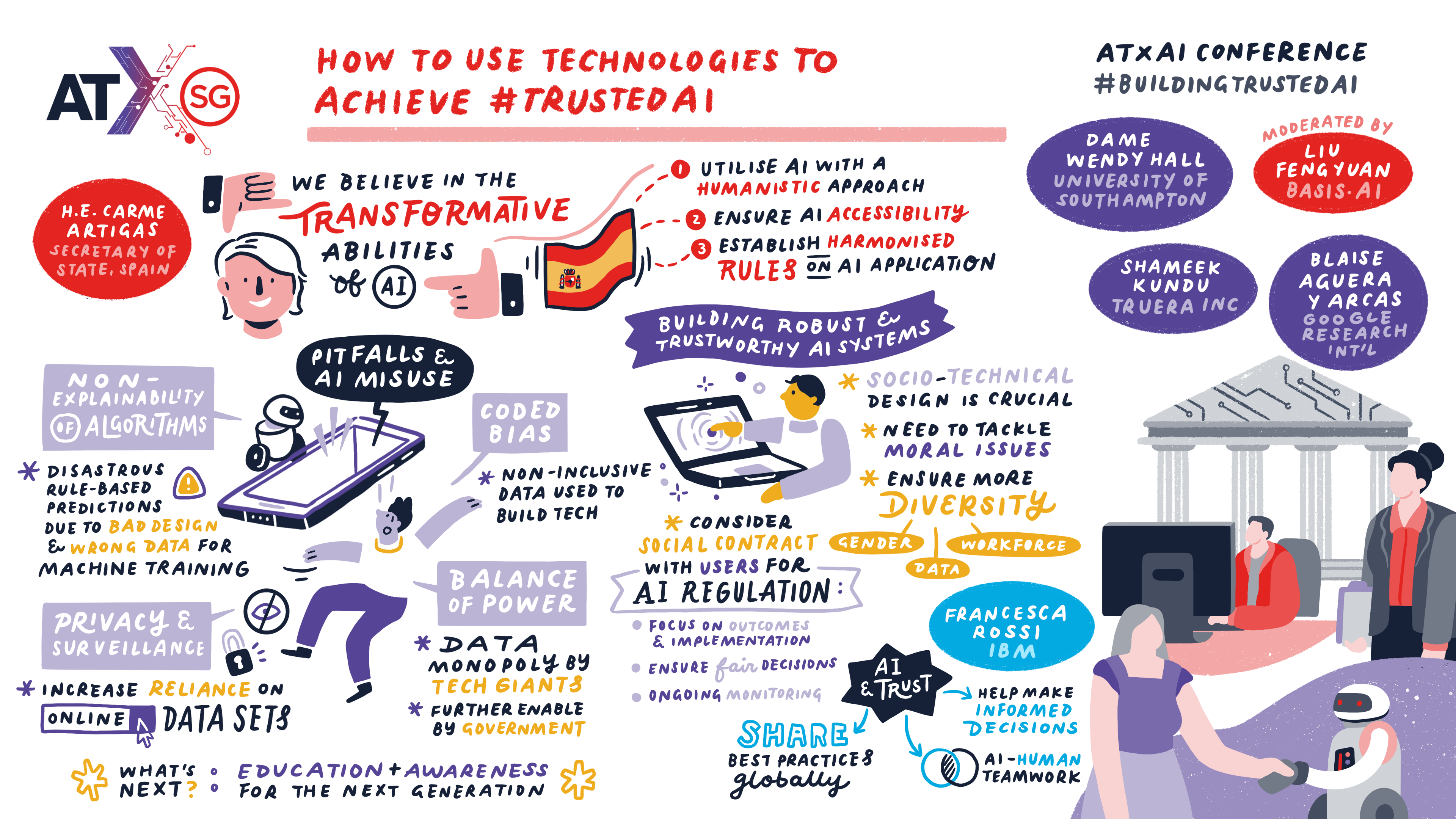

Given the growing attention placed on building trustworthy AI, another panel discussion moderated by Mr Liu Feng-Yuan, Co-founder and CEO of IMDA-accredited AI company Basis.AI, turned the spotlight on the common pitfalls and important principles. Explainability, for example, involves understanding how AI systems use data to arrive at certain decisions.

For Mr Shameek Kundu, Head of Financial Services and Chief Strategy Officer at Truera Inc, satisfying the customer-facing aspect of explainability is a balancing act between the technical nitty-gritty of the AI model and a more surface-level gist with actionable, interpretable information.

The approach to AI regulation is to not necessarily worry about the details of the technology you're using because that might evolve over time. It's to worry about the outcomes. You can explain to individuals and companies just like you would have done if the decision wasn't done using AI.

Mr Shameek Kundu

Head of Financial Services and Chief Strategy Officer, Truera Inc

Another key aspect is to ensure AI is robust against misuse. To this end, Google Research Engineering Fellow Mr Blaise Aguera y Arcas shared that Google has been developing more decentralised ways of performing AI, such as federated learning, to decrease such risks. But beyond technical solutions, he emphasised the need for collective effort.

Being able to regulate AI and have certainty that a technical system is built in a secure way requires the whole industry to step up with much higher standards of what private, secure and confidential computing mean.

Mr Blaise Aguera y Arcas

Google Research Engineering Fellow

Uncovering hidden biases in AI

Meanwhile, in non-transparent models, AI misuse can stem from coded bias and poor choice of data. Dame Wendy Hall, Professor at the University of Southampton, cited the example of a facial recognition system trained using images of only a certain ethnicity. In the end, the AI model couldn’t identify people from other demographics, rendering the technology not only biased but also ineffective.

In other cases, the biased outcomes may not be due to the code itself. When Mr Blaise Aguera y Arcas once searched for the term “physicist” on Google, the first 100 results were all pictures of men. “Regardless of the mix of genders in the beginning, Google uses feedback in order to rank the results,” he explained. This then posed a dilemma of whether companies should intervene and increase the representation of women to a 50-50 split, even if this may not reflect the real-life ratio of 20 to 30 percent women in the field.

The panellists agreed that such problems of AI are not new. More often, people presuppose that problems of fairness and bias originate in the algorithms, when in reality these biases already exist in the real world and the algorithms merely reflect and reinforce them. It is therefore a socio-technical problem that different stakeholders must come together to address, not one that an individual player in the AI ecosystem can solve alone.

This is especially important in a dynamic world where the goalposts of what constitutes fairness are constantly shifting. To navigate thorny issues like these, the panellists highlighted that diversity is key to designing responsible and trusted systems. It would be equally important to put diverse human perspectives at the heart of discussions around ethical AI and educate the next generation on how to think about such issues.

“Only by including all the voices, we can understand how to identify and address the AI ethics concerns,” added Dr Francesca Rossi, IBM’s global leader for AI ethics, a multidisciplinary field with the goal of maximising positive impact while minimising harm and risk to society.

From academia to industry to even the next generation, all panellists ultimately agreed that defining and executing the responsible and human-centric deployment of AI is a whole-of-community effort. “We as human beings have to get involved in this debate. I believe firmly in education and awareness across the board,” concluded Dame Wendy Hall.

Held from 13th to 16th July 2021, Asia Tech x Singapore brought together thought leaders in business, tech and government to discuss the trends, challenges and growth opportunities of the digital economy and how to shape the digital future.
